Intro

In this post, I’m looking at setting up a local Minikube cluster to develop and test applications (test/dev environment) before deploying them to a cloud cluster (prod environment). This approach makes sense when saving resources matters: development and testing incur no costs from the cloud provider.

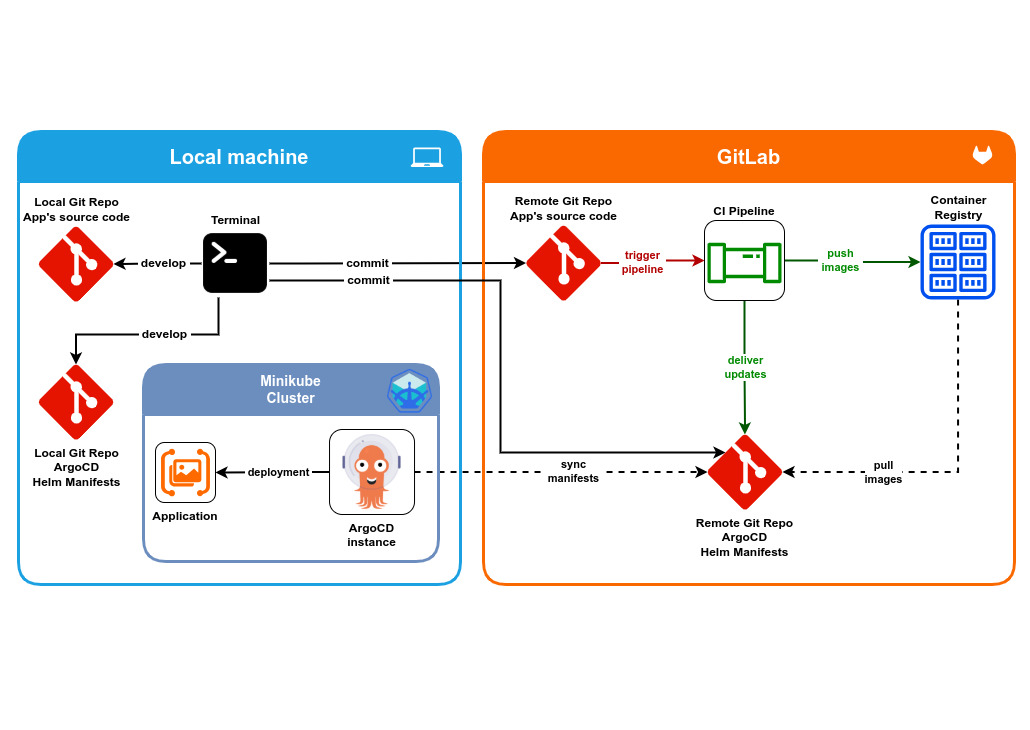

Infrastructure overview

This is the reference scheme I will follow. There are two main entities, the local machine and the GitLab account:

- On the local machine, there is a running Minikube cluster with an ArgoCD instance.

- On the GitLab side, there are a CI pipeline and a container registry.

Local application repository

Here I am focusing not on the application itself but on the infrastructure around it, so the application is not yet ready to be used as a full-fledged web application. For now, it is only a simple Flask web server that is not yet exposed to users.

The contents of app.py:

```python
from flask import Flask

app = Flask(__name__)


@app.route("/")
def entry():
    return "PerceptiView"


if __name__ == "__main__":
    # Listen on all interfaces so the server is reachable from outside the container
    app.run(host='0.0.0.0')
```

The contents of Dockerfile:

```dockerfile
FROM python:3.12-slim-bullseye
WORKDIR /usr/src/app
COPY requirements.txt .
RUN pip install -r requirements.txt
COPY . .
CMD ["python", "app.py"]
```

The contents of .gitlab-ci.yml:

```yaml
# Run the pipeline only when files under src/ change
workflow:
  rules:
    - changes:
        - src/**/*

stages:
  - build
  - deploy

variables:
  IMAGE_NAME: ez-machine-learning/perceptiview
  FULL_NAME: $CI_REGISTRY/$IMAGE_NAME:$CI_COMMIT_SHORT_SHA

build-app:
  image: docker
  stage: build
  services:
    - docker:dind
  script:
    # --password-stdin keeps the password out of the process list
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    - docker build -t $FULL_NAME .
    - docker push $FULL_NAME

deploy-app:
  stage: deploy
  image: ubuntu:22.04
  before_script:
    - 'which ssh-agent || ( apt-get update -y && apt-get install openssh-client git -y )'
    - mkdir -p /root/.ssh
    - echo "$ARGOCD_SSH_KEY" > /root/.ssh/perceptiview_rsa
    - chmod 600 /root/.ssh/perceptiview_rsa
    - ssh-keyscan -H gitlab.com >> ~/.ssh/known_hosts
    - chmod 644 ~/.ssh/known_hosts
    # run ssh-agent
    - eval $(ssh-agent -s)
    # add ssh key stored in ARGOCD_SSH_KEY variable to the agent store
    - echo "$ARGOCD_SSH_KEY" | tr -d '\r' | ssh-add -
    # Git
    - git config --global user.email "gitlab-ci@gmail.com"
    - git config --global user.name "gitlab-ci"
    - git clone $ARGOCD_REPO
    - cd son-of-argus
    - ls -latr
  script:
    # Update Image TAG
    - sed -i "s/perceptiview:.*/perceptiview:${CI_COMMIT_SHORT_SHA}/g" perceptiview/values.yaml
    - git add perceptiview/values.yaml
    - git commit -am "PerceptiView image update - tag ${CI_COMMIT_SHORT_SHA}"
    - git push
```

The CI pipeline is triggered only if there are changes in the src folder.
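The `sed` command in `deploy-app` replaces whatever follows `perceptiview:` on a line with the new short commit SHA. That is how the manifests repository learns about the freshly pushed image. Below is a minimal Python sketch of the same substitution. The `values.yaml` content is hypothetical (the post does not show that file), and its keys are taken from the `.Values` references in the Helm templates of the manifests repository.

```python
import re

# Hypothetical values.yaml: the real file is not shown in the post; the keys
# mirror the .Values references used by the Helm templates.
VALUES_YAML = """\
label: perceptiview
namespace: perceptiview
image:
  name: registry.gitlab.com/ez-machine-learning/perceptiview:0a1b2c3d
  replicaCount: 1
secrets:
  registry: perceptiview-registry
"""


def bump_image_tag(values_text: str, short_sha: str) -> str:
    """Python equivalent of sed "s/perceptiview:.*/perceptiview:<sha>/g".

    As in sed, "." does not match a newline, so ".*" only consumes the rest
    of the line that contains "perceptiview:".
    """
    # A function replacement avoids any backslash-escape processing.
    return re.sub(r"perceptiview:.*", lambda _: f"perceptiview:{short_sha}", values_text)


if __name__ == "__main__":
    print(bump_image_tag(VALUES_YAML, "9f8e7d6c"))
```

The pattern is unanchored and greedy, in both sed and Python. Any other line containing `perceptiview:` (a key with that name, for instance) would be rewritten too, so it is safest if the image reference is the only such line.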

Local manifests repository

This repo contains the Helm manifests for the application. ArgoCD watches it and syncs these manifests to deploy the application and roll out updates, if any.

Helm templates:

- A Deployment with the app’s image

- A Secret for accessing the application’s container registry

The contents of deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: {{ .Values.label }}
  name: perceptiview
  namespace: {{ .Values.namespace }}
spec:
  replicas: {{ .Values.image.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Values.label }}
  template:
    metadata:
      labels:
        app: {{ .Values.label }}
    spec:
      containers:
        - image: {{ .Values.image.name }}
          name: perceptiview
      imagePullSecrets:
        - name: {{ .Values.secrets.registry }}
```
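The Deployment reads its settings from `values.yaml`, which the post does not show. Below is a hypothetical pre-commit check in Python, using only the standard library. It lists the `.Values` paths a template references and reports the ones a values mapping cannot resolve. It understands plain `{{ .Values.a.b }}` references only, not the full Go template language.

```python
import re
from collections.abc import Mapping
from typing import Any

# Matches simple references such as "{{ .Values.image.name }}".
VALUES_REF = re.compile(r"\{\{\s*\.Values\.([A-Za-z0-9_.]+)\s*\}\}")


def referenced_paths(template: str) -> set[str]:
    """Return every dotted .Values path used in the template."""
    return set(VALUES_REF.findall(template))


def missing_paths(template: str, values: Mapping[str, Any]) -> set[str]:
    """Return the referenced paths that cannot be resolved in `values`."""
    missing = set()
    for path in referenced_paths(template):
        node: Any = values
        for key in path.split("."):
            if isinstance(node, Mapping) and key in node:
                node = node[key]
            else:
                missing.add(path)
                break
    return missing


if __name__ == "__main__":
    deployment = """
    replicas: {{ .Values.image.replicaCount }}
    - image: {{ .Values.image.name }}
    - name: {{ .Values.secrets.registry }}
    """
    # Hypothetical values with secrets.registry deliberately left out.
    values = {"image": {"name": "perceptiview:latest", "replicaCount": 1}}
    print(sorted(missing_paths(deployment, values)))  # ['secrets.registry']
```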

The contents of registry-access.yaml:

```yaml
apiVersion: v1
data:
  .dockerconfigjson: CONTAINER_REGISTRY_SECRET
kind: Secret
metadata:
  name: {{ .Values.secrets.registry }}
  namespace: {{ .Values.namespace }}
type: kubernetes.io/dockerconfigjson
```
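`CONTAINER_REGISTRY_SECRET` is a placeholder. The `.dockerconfigjson` key of a `kubernetes.io/dockerconfigjson` Secret holds a base64-encoded Docker `config.json`. Below is a minimal sketch of producing that value from deploy-token credentials, assuming `registry.gitlab.com` as the registry host. The username and token in the example are made up.

```python
import base64
import json


def dockerconfigjson(registry: str, username: str, password: str) -> str:
    """Build the base64 value for a Secret's .dockerconfigjson key."""
    # "auth" is base64("username:password"), as in ~/.docker/config.json.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {
        "auths": {
            registry: {"username": username, "password": password, "auth": auth}
        }
    }
    # Values under a Secret's "data" field must themselves be base64-encoded.
    return base64.b64encode(json.dumps(config).encode()).decode()


if __name__ == "__main__":
    # Made-up deploy-token credentials, for illustration only.
    print(dockerconfigjson("registry.gitlab.com", "gitlab+deploy-token-1", "example-token"))
```

If you prefer not to script this, `kubectl create secret docker-registry NAME --docker-server=... --docker-username=... --docker-password=... --dry-run=client -o yaml` prints an equivalent Secret.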

Minikube setup

First, install Docker, Helm and Minikube (plus VirtualBox, which the driver below relies on).

Then start Minikube:

```shell
minikube start --driver=virtualbox
```
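Before running `minikube start`, it can help to confirm that the tools installed in the previous step are actually on `PATH`. Below is a minimal sketch using `shutil.which`. The `which` parameter exists only so the check can be exercised without the real binaries. VirtualBox is not checked here.

```python
import shutil
from collections.abc import Callable, Sequence
from typing import Optional

# CLIs named in this post; the VirtualBox driver itself is not checked here.
REQUIRED_TOOLS = ("docker", "helm", "minikube")


def missing_tools(
    tools: Sequence[str] = REQUIRED_TOOLS,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> list[str]:
    """Return the tools that cannot be found on PATH, in the given order."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("missing:", ", ".join(missing) if missing else "none")
```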

ArgoCD setup

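Deploy ArgoCD into the Minikube cluster following the ArgoCD Getting Started installation guide for Minikube, which installs it into the argocd namespace.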

Edit the argocd-server Service and set its type to NodePort:

```shell
minikube kubectl -- edit svc argocd-server -n argocd
```
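The interactive edit can also be done in one non-interactive step with `kubectl patch`. Below is a minimal Python sketch, assuming `minikube` is on `PATH` and ArgoCD runs in the `argocd` namespace. The command is built separately so it can be printed and reviewed before anything runs. The function names are hypothetical.

```python
import json
import shlex
import subprocess


def nodeport_patch_command(service: str = "argocd-server", namespace: str = "argocd") -> list[str]:
    """Build a `minikube kubectl -- patch svc` call that sets spec.type to NodePort."""
    patch = json.dumps({"spec": {"type": "NodePort"}})
    return ["minikube", "kubectl", "--", "patch", "svc", service, "-n", namespace, "-p", patch]


def apply_nodeport_patch() -> None:
    """Run the patch; check=True raises CalledProcessError if kubectl fails."""
    subprocess.run(nodeport_patch_command(), check=True)


if __name__ == "__main__":
    # Print the command for review; call apply_nodeport_patch() to execute it.
    print(shlex.join(nodeport_patch_command()))
```

Calling `apply_nodeport_patch()` performs the change. The printed form can equally be pasted into a shell.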

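Verify that the change has been accepted, for example with `minikube kubectl -- get svc argocd-server -n argocd` (the TYPE column should now show NodePort).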

Minikube tunnel

Optionally, the argocd CLI can be installed to configure new users and their permissions. The initial password for the admin user is stored in the argocd-initial-admin-secret Secret in the argocd namespace.
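Values in a Secret's `data` field are base64-encoded, so the password must be decoded after it is read. Below is a minimal sketch that fetches the Secret as JSON through `minikube kubectl` and decodes its `password` key. The function names are hypothetical.

```python
import base64
import json
import subprocess


def decode_secret_key(secret_json: str, key: str) -> str:
    """Decode one entry of a Secret taken from `kubectl get secret -o json`."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"][key]).decode()


def argocd_initial_password(namespace: str = "argocd") -> str:
    """Fetch argocd-initial-admin-secret via minikube's kubectl and decode it."""
    result = subprocess.run(
        ["minikube", "kubectl", "--", "get", "secret",
         "argocd-initial-admin-secret", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return decode_secret_key(result.stdout, "password")
```

With the cluster running, `print(argocd_initial_password())` shows the password for the `admin` user. If the argocd CLI is installed, `argocd admin initial-password -n argocd` gives the same result.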

ArgoCD instance

Once the tunnel is established, the ArgoCD instance can be accessed through a browser.

At this point, there are no applications yet.

For ArgoCD to retrieve the Helm manifests from the repository, a connection to that repository must be set up first.
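Besides the UI, ArgoCD can pick up a repository connection declared as a Secret labelled `argocd.argoproj.io/secret-type: repository` in its own namespace. Below is a sketch that builds such a Secret for an SSH connection. The Secret name, repository URL and key are placeholders, not values from the post.

```python
import json


def repository_secret(name: str, url: str, ssh_private_key: str) -> dict:
    """Declarative ArgoCD connection to a Git repository over SSH (sketch)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": name,
            "namespace": "argocd",
            # ArgoCD treats Secrets carrying this label as repository connections.
            "labels": {"argocd.argoproj.io/secret-type": "repository"},
        },
        # stringData takes plain text; the API server stores it base64-encoded.
        "stringData": {"type": "git", "url": url, "sshPrivateKey": ssh_private_key},
    }


if __name__ == "__main__":
    # Placeholders: use the real manifests-repo URL and its private key.
    secret = repository_secret("son-of-argus", "git@gitlab.com:example/son-of-argus.git", "<private key>")
    print(json.dumps(secret, indent=2))
```

kubectl accepts JSON manifests, so the printed output can be piped into `minikube kubectl -- apply -f -`. A read-only key is enough here, because ArgoCD only pulls the manifests.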

An application can now be added by clicking + NEW APP.

ArgoCD opens a form that has to be filled in with the application’s settings (source repository and path, destination cluster and namespace).

Finally, press the Create button (top left corner in the first image).

Note:

The app is “green” here because it has already gone through the pipeline successfully.

If the pipeline has not run yet when the application is created, the ArgoCD application may report an error, depending on how ready the manifests are at that moment.
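The values entered in the + NEW APP form can also be kept in Git as an `Application` resource. Below is a sketch that prints one as JSON, under several assumptions. The repository URL and destination namespace are placeholders. The `perceptiview` path is inferred from the `perceptiview/values.yaml` file that the CI job edits. The automated sync policy is an assumption and not necessarily what the post sets in the UI.

```python
import json


def argocd_application(repo_url: str, path: str, namespace: str) -> dict:
    """Declarative counterpart of the + NEW APP form (sketch)."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": "perceptiview", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "path": path, "targetRevision": "HEAD"},
            # https://kubernetes.default.svc is the cluster ArgoCD runs in (Minikube).
            "destination": {"server": "https://kubernetes.default.svc", "namespace": namespace},
            "syncPolicy": {
                # Assumption: sync automatically on every commit the pipeline pushes.
                "automated": {"prune": True, "selfHeal": True},
                "syncOptions": ["CreateNamespace=true"],
            },
        },
    }


if __name__ == "__main__":
    # Placeholder repository URL and destination namespace.
    app = argocd_application("git@gitlab.com:example/son-of-argus.git", "perceptiview", "perceptiview")
    print(json.dumps(app, indent=2))
```

Applying the output with `minikube kubectl -- apply -f -` creates the same kind of application the form does. `CreateNamespace=true` only matters if the target namespace does not exist yet.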

Remote application and remote manifests repositories

These repositories are the same as those on the local machine.

Deploy tokens

To pull images from the repository’s container registry, the Deployment needs credentials for it. Using the username and password of the GitLab account itself is a bad idea, for two reasons:

- Credentials in a Secret are only base64-encoded, not encrypted

- A leak of these credentials grants access to the whole account
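The first point can be made concrete: anyone who can read the Secret gets the credentials back with two base64 decodes and a JSON parse, with no key involved. Below is a short sketch with made-up credentials. It assumes each registry entry carries the standard `auth` field.

```python
import base64
import json


def credentials_from_dockerconfigjson(data_value: str) -> dict[str, tuple[str, str]]:
    """Recover (username, password) per registry from a Secret's .dockerconfigjson."""
    config = json.loads(base64.b64decode(data_value))
    creds = {}
    for registry, entry in config["auths"].items():
        # "auth" is base64("username:password"); split on the first colon only,
        # because the password itself may contain colons.
        username, _, password = base64.b64decode(entry["auth"]).decode().partition(":")
        creds[registry] = (username, password)
    return creds


if __name__ == "__main__":
    # Made-up credentials, encoded exactly as they would sit in the Secret.
    auth = base64.b64encode(b"alice:account-password").decode()
    config = {"auths": {"registry.gitlab.com": {"auth": auth}}}
    value = base64.b64encode(json.dumps(config).encode()).decode()
    print(credentials_from_dockerconfigjson(value))
    # {'registry.gitlab.com': ('alice', 'account-password')}
```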

RBAC adds an extra layer of security by restricting who can read the Secret. Still, the mere fact that these credentials unlock the whole account outweighs that safeguard.

The solution is to give the application’s deployment granular access through a deploy token. Here, access is granted only to the application’s container registry, so in the worst case, even if the token is somehow exposed, only the contents of that registry can be compromised. The account owner can then simply revoke the exposed token, remove the compromised Docker images and build new ones. In addition, scopes must be defined when creating a token. In this case, only read access to the registry is granted (the read_registry scope).
