Current plan

At this stage I am going to do the following:

- Add Helm release and additional resources for AWS LBC

- Add Helm release for ArgoCD and ingress

- Add GitLab CI-pipeline

- Retrieve the Load Balancer Hosted Zone ID

AWS LBC Helm release

There are many ways to deploy a Helm chart. In the end I chose this one: let the release itself do everything for me, and I’ll just provide it with the required values. As I mentioned earlier, I prefer to keep everything in one place, so all the values are set inline in the release, and there is no need to forward values from main.tf to the Helm charts.

Helm chart for AWS LBC – application version v2.10.1, chart version 1.10.1

resource "helm_release" "aws_lbc" {
  count      = var.create_lbc ? 1 : 0
  name       = "aws-load-balancer-controller"
  repository = "https://aws.github.io/eks-charts"
  chart      = "aws-load-balancer-controller"
  namespace  = "kube-system"
  version    = var.aws_lbc_helm_chart_version

  values = [
    <<-EOT
    serviceAccount:
      create: true
      name: ${var.aws_lbc_sa}
      annotations:
        eks.amazonaws.com/role-arn: ${aws_iam_role.aws_lbc[0].arn}
    rbac:
      create: true
    enableServiceMutatorWebhook: false
    region: ${var.region}
    vpcId: ${aws_vpc.aws-vpc.id}
    clusterName: ${aws_eks_cluster.eks.name}
    EOT
  ]

  depends_on = [
    aws_eks_cluster.eks,
    aws_eks_node_group.general
  ]
}

ArgoCD Helm release

I am currently using the following Helm chart for ArgoCD – application version v2.13.3, chart version 7.7.14.

resource "helm_release" "argocd" {
  name             = "argocd"
  repository       = "https://argoproj.github.io/argo-helm"
  chart            = "argo-cd"
  namespace        = "argocd"
  create_namespace = true
  version          = var.argocd_helm_chart_version

  values = [
    <<-EOT
    global:
      domain: ${var.argocd_hostname}
    configs:
      params:
        server.insecure: true
    server:
      ingress:
        enabled: true
        controller: aws
        ingressClassName: alb
        annotations:
          alb.ingress.kubernetes.io/scheme: internet-facing
          alb.ingress.kubernetes.io/target-type: ip
          alb.ingress.kubernetes.io/backend-protocol: HTTP
          # Port 80 listens on plain HTTP and is redirected to 443 by ssl-redirect
          alb.ingress.kubernetes.io/listen-ports: '[{"HTTP":80}, {"HTTPS":443}]'
          alb.ingress.kubernetes.io/ssl-redirect: '443'
          alb.ingress.kubernetes.io/load-balancer-name: ez-lb
          alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:${var.region}:${data.aws_caller_identity.current.account_id}:certificate/${var.argocd_certificate}
        aws:
          serviceType: ClusterIP
          backendProtocolVersion: GRPC
    EOT
  ]

  depends_on = [
    helm_release.aws_lbc
  ]
}

The certificate referenced in the alb.ingress.kubernetes.io/certificate-arn annotation was created manually in advance in AWS Certificate Manager and validated by email.

Checking the availability of the ArgoCD instance:

kubectl port-forward svc/argocd-server -n argocd 8080:443

Repositories access

To provide access to the repositories with all the Helm charts, deploy keys were used. These keys are stored in Kubernetes Secrets:

resource "kubernetes_secret_v1" "repo_1_connect_ssh_key" {
  count = var.create_repo_1_connection_key_secret ? 1 : 0

  metadata {
    name      = var.repo_1_name
    namespace = "argocd"
    labels = {
      "argocd.argoproj.io/secret-type" = "repository"
    }
  }

  data = {
    type          = "git"
    url           = var.repo_1_url
    sshPrivateKey = base64decode(var.repo_1_ssh_key)
  }
}

resource "kubernetes_secret_v1" "repo_2_connect_ssh_key" {
  count = var.create_repo_2_connection_key_secret ? 1 : 0

  metadata {
    name      = var.repo_2_name
    namespace = "argocd"
    labels = {
      "argocd.argoproj.io/secret-type" = "repository"
    }
  }

  data = {
    type          = "git"
    url           = var.repo_2_url
    sshPrivateKey = base64decode(var.repo_2_ssh_key)
  }
}
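The base64decode() calls above suggest that the SSH key variables hold the private key in base64-encoded form. Assuming that is the case, a key could be prepared for a CI/CD variable roughly like this. This is only a sketch: the function name and the file name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Encode a private key file as single-line base64, suitable for pasting
# into a CI/CD variable. The file path is supplied by the caller.
encode_key_for_ci() {
  # tr strips the line wrapping that some base64 implementations add
  base64 < "$1" | tr -d '\n'
}

# Example (hypothetical file name):
#   encode_key_for_ci ./repo_1_deploy_key
```

Keeping the value on a single line avoids problems with multi-line values in CI/CD variables, and base64decode() restores the original key, including its line breaks.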

GitLab CI-pipeline

I need to install the AWS CLI on the GitLab Runner because the ArgoCD load balancer is created by the Helm chart, not by a separate Terraform aws_lb resource, so I did not find a straightforward way to extract the Hosted Zone ID [1] of the created LB with Terraform’s built-in tools. The LB’s Hosted Zone ID is required to bind the LB to the Route53 record argocd.elenche-zetetique.com.

So in this case I have to use a workaround: run a CLI command via the local-exec provisioner to retrieve the Hosted Zone ID and then add it to the Route53 record.

stages:
  - preflight
  - plan
  - apply
  - destroy

variables:
  PATH_TO_SCRIPT: "remote"
  MAIN_PATH: "${CI_PROJECT_DIR}/${PATH_TO_SCRIPT}"
  TF_VAR_argocd_certificate: ${argocd_certificate}
  TF_VAR_argocd_helm_chart_version: ${argocd_helm_chart_version}
  TF_VAR_argocd_hostname: ${argocd_hostname}
  TF_VAR_argocd_lb_name: ${argocd_lb_name}
  TF_VAR_AWS_DEFAULT_REGION: ${AWS_DEFAULT_REGION}
  TF_VAR_aws_eks_cluster_name: ${aws_eks_cluster_name}
  TF_VAR_aws_eks_cluster_version: ${aws_eks_cluster_version}
  TF_VAR_aws_lbc_helm_chart_version: ${aws_lbc_helm_chart_version}
  TF_VAR_CI_PROJECT_ID: ${CI_PROJECT_ID}
  TF_VAR_developer_user: ${developer_user}
  TF_VAR_env: ${env}
  TF_VAR_hosted_zone_id: ${hosted_zone_id}
  TF_VAR_local_file_elb_hosted_zone_id: ${local_file_elb_hosted_zone_id}
  TF_VAR_manager_user: ${manager_user}
  TF_VAR_repo_1_name: ${repo_1_name}
  TF_VAR_repo_1_ssh_key: ${repo_1_ssh_key}
  TF_VAR_repo_1_url: ${repo_1_url}
  TF_VAR_repo_2_name: ${repo_2_name}
  TF_VAR_repo_2_ssh_key: ${repo_2_ssh_key}
  TF_VAR_repo_2_url: ${repo_2_url}
  TF_VAR_script_elb_hosted_zone_id: ${script_elb_hosted_zone_id}

image:
  name: "$CI_TEMPLATE_REGISTRY_HOST/gitlab-org/terraform-images/releases/1.4:v1.0.0"

cache:
  key: "${CI_PROJECT_ID}"
  paths:
    - $PATH_TO_SCRIPT/.terraform/

default:
  before_script:
    - cd ${MAIN_PATH}
    - >
      gitlab-terraform init
      -backend-config="address=https://gitlab.com/api/v4/projects/$CI_PROJECT_ID/terraform/state/$TF_STATE_NAME"
      -backend-config="lock_address=https://gitlab.com/api/v4/projects/$CI_PROJECT_ID/terraform/state/$TF_STATE_NAME/lock"
      -backend-config="unlock_address=https://gitlab.com/api/v4/projects/$CI_PROJECT_ID/terraform/state/$TF_STATE_NAME/lock"
      -backend-config="username=$GL_USERNAME"
      -backend-config="password=$TF_ACCESS_TOKEN"
      -backend-config="lock_method=POST"
      -backend-config="unlock_method=DELETE"
      -backend-config="retry_wait_min=5"

preflight-tf-script:
  stage: preflight
  when: manual
  script:
    - cd ${MAIN_PATH}
    - gitlab-terraform validate

plan-tf-script:
  stage: plan
  when: manual
  script:
    - cd ${MAIN_PATH}
    - gitlab-terraform plan
    - gitlab-terraform plan-json
  needs:
    - job: preflight-tf-script
  artifacts:
    paths:
      - $PATH_TO_SCRIPT/plan.cache
    reports:
      terraform: $PATH_TO_SCRIPT/plan.json

apply-tf-script:
  stage: apply
  when: manual
  script:
    # Install AWS CLI v2
    - curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    - unzip awscliv2.zip
    - ./aws/install
    - rm awscliv2.zip
    - rm -rf ./aws
    # Install JQ
    - apt-get install -y jq
    # Apply TF script
    - cd ${MAIN_PATH}
    - gitlab-terraform apply -auto-approve
  needs:
    - job: plan-tf-script
  environment:
    name: $TF_STATE_NAME

destroy-tf-script:
  stage: destroy
  when: manual
  script:
    - cd ${MAIN_PATH}
    - gitlab-terraform destroy
  needs:
    - job: apply-tf-script
  environment:
    name: $TF_STATE_NAME

All variables in the TF-script that should be hidden have been moved to CI/CD variables (Settings -> CI/CD -> Variables) and added to the variables in the pipeline with TF_VAR_ prefix.
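Terraform reads any environment variable named TF_VAR_<name> as the value of the input variable <name>, which is why the prefix is enough to pass the values through. Since a forgotten CI/CD variable simply arrives as an empty string, a small guard at the start of a job could catch it early. The following is a minimal sketch, not part of the original pipeline; the function name require_tf_vars is hypothetical.

```shell
#!/bin/bash
# Fail if any of the named Terraform input variables is missing or empty
# in the environment. Arguments are variable names WITHOUT the TF_VAR_ prefix.
require_tf_vars() {
  local missing=0 name
  for name in "$@"; do
    # printenv prints nothing for unset (or non-exported) variables
    if [ -z "$(printenv "TF_VAR_${name}")" ]; then
      echo "missing or empty: TF_VAR_${name}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage in a job script:
#   require_tf_vars argocd_hostname hosted_zone_id argocd_lb_name
```

The check only reports variable names, never their values, so it is safe to run with masked variables.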

Retrieving a Load Balancer Hosted Zone ID

To be honest, I didn’t expect that I would have to go to such trouble to extract just ONE parameter. The code excerpt below retrieves the hosted zone ID of the ArgoCD load balancer after it is deployed and binds it to the Route53 record when the record is created. I added a time_sleep resource to give the ArgoCD load balancer a reasonable amount of time to be deployed.

resource "time_sleep" "wait_10_minutes" {
  create_duration  = "10m"
  destroy_duration = "30s"

  depends_on = [helm_release.argocd]
}

data "kubernetes_ingress_v1" "argocd_ingress" {
  count = var.deploy_data_kubernetes_ingress_v1_argocd_ingress ? 1 : 0

  metadata {
    name      = "argocd-server"
    namespace = "argocd"
  }

  depends_on = [time_sleep.wait_10_minutes]
}

resource "null_resource" "extract_elb_hosted_zone_id" {
  count = var.deploy_terraform_data_elb_hosted_zone_id ? 1 : 0

  provisioner "local-exec" {
    command = "/bin/bash ${var.script_elb_hosted_zone_id} ${var.argocd_lb_name} ${var.local_file_elb_hosted_zone_id}"
  }

  depends_on = [time_sleep.wait_10_minutes]
}

data "local_file" "elb_hosted_zone_id" {
  count    = var.deploy_local_file_elb_hosted_zone_id ? 1 : 0
  filename = var.local_file_elb_hosted_zone_id

  depends_on = [null_resource.extract_elb_hosted_zone_id]
}

resource "aws_route53_record" "argoce_record" {
  count   = var.deploy_argocd_route53_record ? 1 : 0
  zone_id = var.hosted_zone_id
  name    = var.argocd_hostname
  type    = "A"

  alias {
    name                   = data.kubernetes_ingress_v1.argocd_ingress[0].status[0].load_balancer[0].ingress[0].hostname
    zone_id                = element(split("\n", data.local_file.elb_hosted_zone_id[0].content), 0)
    evaluate_target_health = true
  }

  depends_on = [null_resource.extract_elb_hosted_zone_id]
}
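The fixed 10-minute time_sleep always costs the full ten minutes, even when the load balancer is ready sooner. One possible alternative, which is not what this setup uses, is to poll until the load balancer reports the state "active". The sketch below assumes the AWS CLI is available where the script runs; the function names wait_for and lb_is_active are hypothetical.

```shell
#!/bin/bash
# Retry a command until it succeeds or the attempts run out.
# Usage: wait_for <max_attempts> <delay_seconds> <command> [args...]
wait_for() {
  local max_attempts=$1 delay=$2 attempt=1
  shift 2
  while :; do
    "$@" && return 0
    [ "$attempt" -ge "$max_attempts" ] && return 1
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Hypothetical check: succeeds once the load balancer named $1 is active.
# A load balancer that does not exist yet makes the CLI call fail,
# which simply counts as "not ready".
lb_is_active() {
  local state
  state=$(aws elbv2 describe-load-balancers --names "$1" \
    --query 'LoadBalancers[0].State.Code' --output text 2>/dev/null) || return 1
  [ "$state" = "active" ]
}

# Example: check every 15 seconds, give up after 40 attempts (about 10 minutes)
#   wait_for 40 15 lb_is_active ez-lb
```

Such a loop could run at the start of the local-exec script, so the upper bound stays the same while the typical wait becomes shorter.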

The contents of the Bash script:

#!/bin/bash

# Accept input parameters
LOAD_BALANCER_NAME=$1
OUTPUT_FILE=$2

# Find the load balancer by name and extract its canonical hosted zone ID
ELB_HOSTED_ZONE_ID=$(aws elbv2 describe-load-balancers --output json | jq -r --arg LBNAME "${LOAD_BALANCER_NAME}" '.LoadBalancers[] | select(.LoadBalancerName == $LBNAME) | .CanonicalHostedZoneId')

# Write the ID to the file that Terraform reads afterwards
echo "$ELB_HOSTED_ZONE_ID" > "$OUTPUT_FILE"

The output txt-file contains only one line with the LB’s Hosted Zone ID (a 14-character ID):

HOSTEDXZONEXID
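If the load balancer is not found, the script above still writes a file, just with an empty line in it, and the failure only shows up later at the Route53 record. A slightly more defensive variant is sketched below. It filters with the CLI's own --names and --query options instead of jq and refuses to write an implausible value. The function names are hypothetical, and the "uppercase letters and digits only" rule is an assumption based on the sample above.

```shell
#!/bin/sh
# Returns 0 only for a plausible hosted zone ID: non-empty and made up
# solely of uppercase letters and digits (assumption, see lead-in).
# This also rejects "null" (jq) and "None" (AWS CLI text output).
is_plausible_zone_id() {
  case "$1" in
    "" | *[!A-Z0-9]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Same lookup as the script above, but filtered by the CLI itself.
fetch_zone_id() {
  aws elbv2 describe-load-balancers --names "$1" \
    --query 'LoadBalancers[0].CanonicalHostedZoneId' --output text
}

# Write the ID to the output file, or fail so that Terraform stops here.
# Usage: write_zone_id <load_balancer_name> <output_file>
write_zone_id() {
  zone_id=$(fetch_zone_id "$1") || return 1
  if ! is_plausible_zone_id "$zone_id"; then
    echo "unexpected hosted zone ID: '${zone_id}'" >&2
    return 1
  fi
  printf '%s\n' "$zone_id" > "$2"
}
```

A non-zero exit code from local-exec makes the apply fail at this step, which is easier to diagnose than an invalid alias target.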

Deploying with pipeline

The pipeline deploys the infrastructure:

One can see that the load balancer is attached to the record for ArgoCD in Route53:

Before creating this subdomain, I added name servers to the main domain. You can see below that there are now six name servers, whereas originally there were only two from Bluehost:

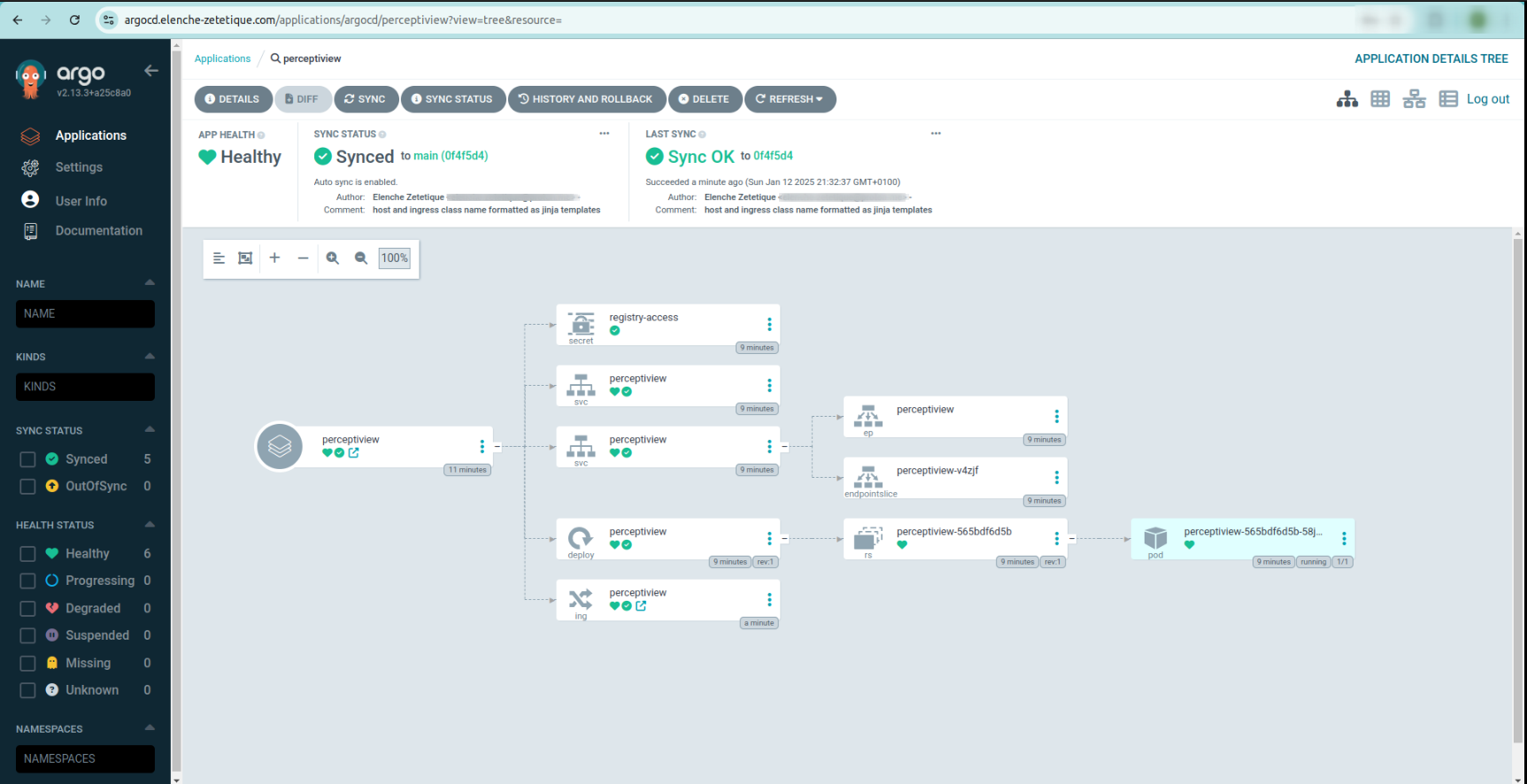

And finally, an instance of ArgoCD is available at argocd.elenche-zetetique.com [2]:

[1] Not to be confused with the Hosted Zone ID of your own Route53 records: the LB is hosted in a separate zone that is in no way related to yours.

[2] Generally, you should not make this address globally accessible, as this gives malicious actors an additional attack vector and potential access to the infrastructure. This was for demonstration purposes only, and in all likelihood the site will no longer be available by the time you read this article.
